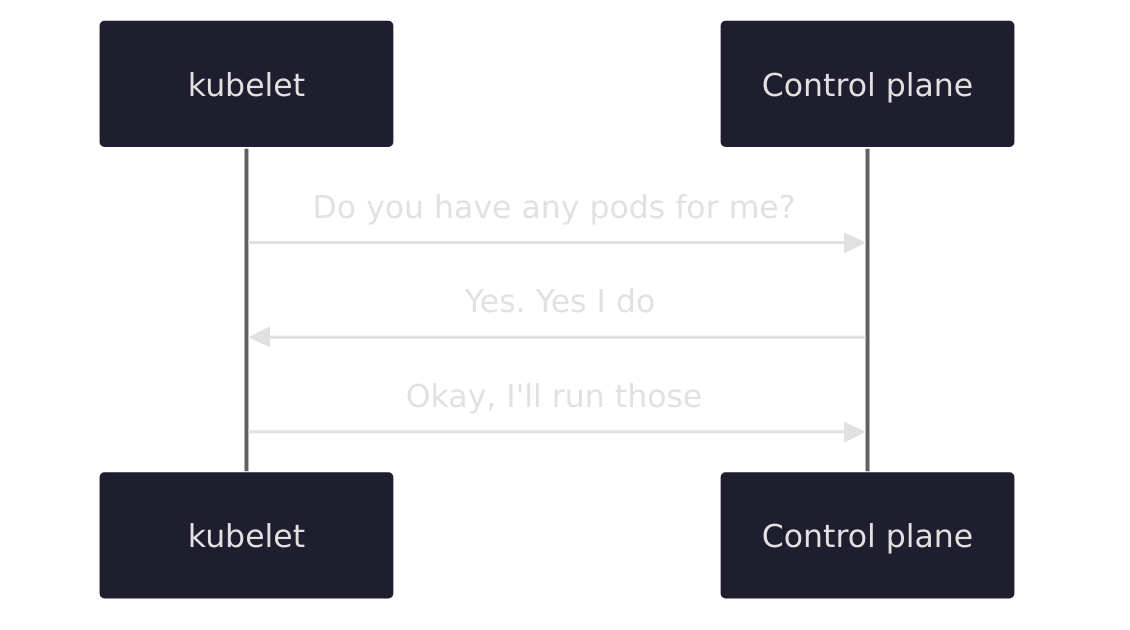

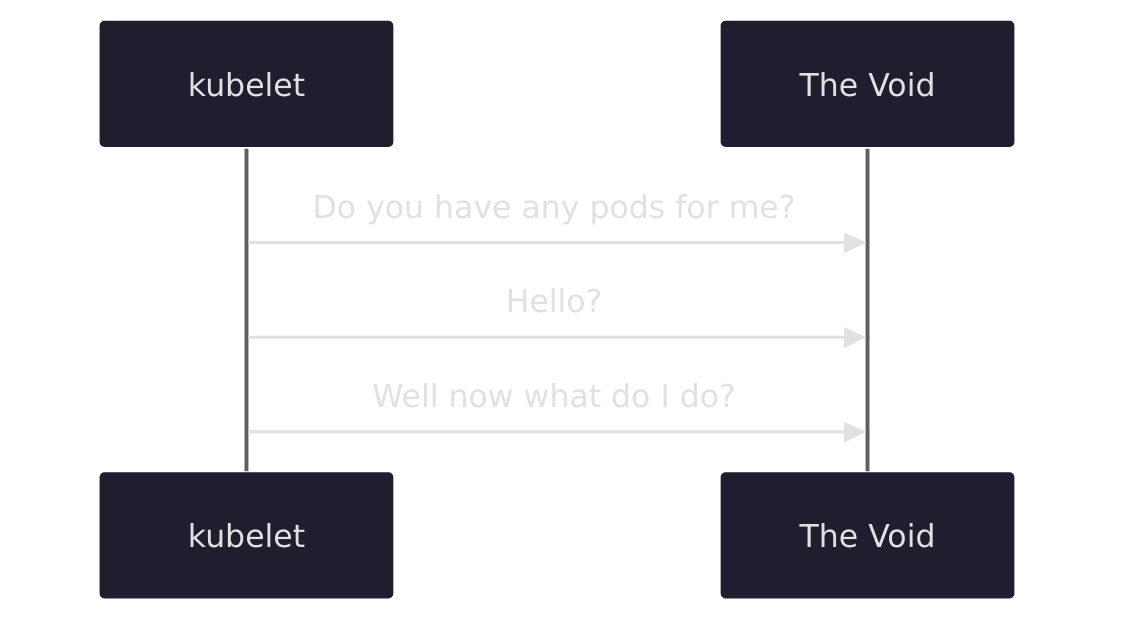

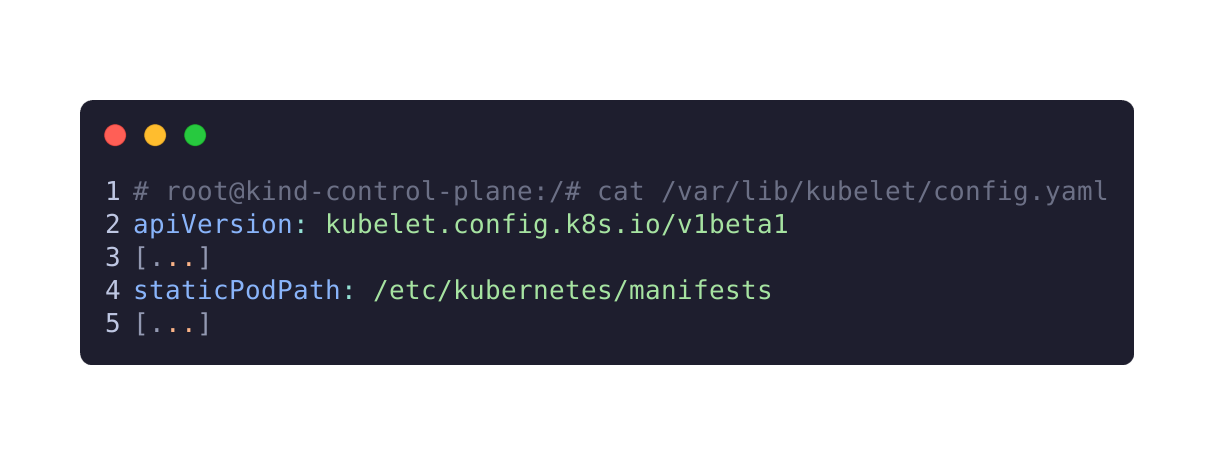

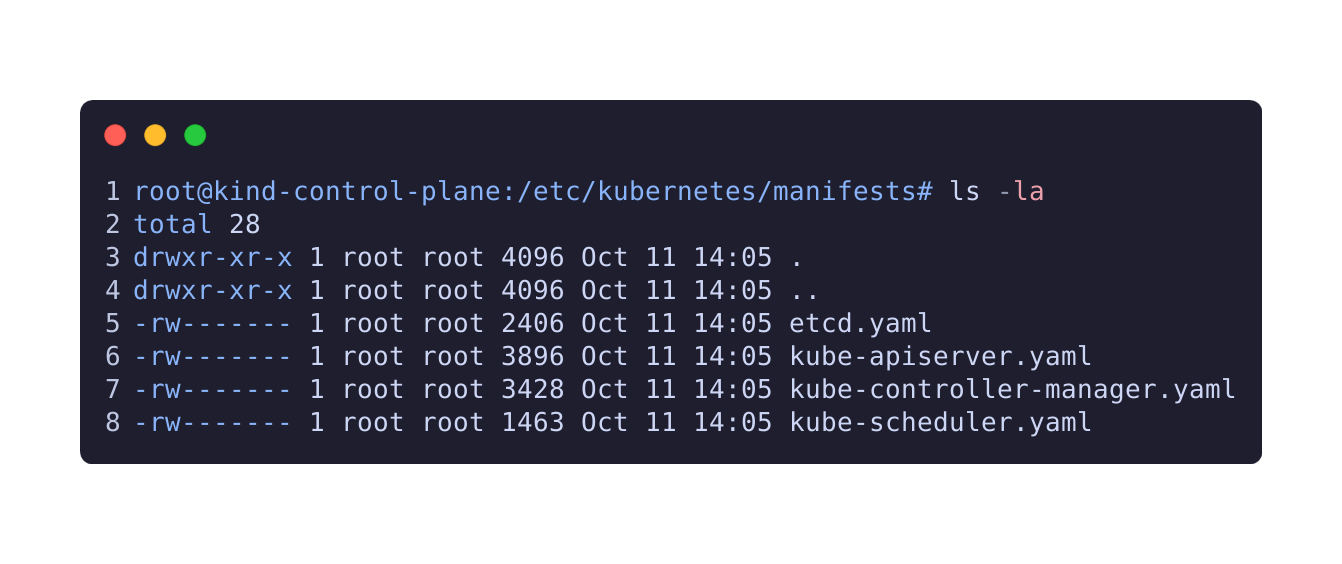

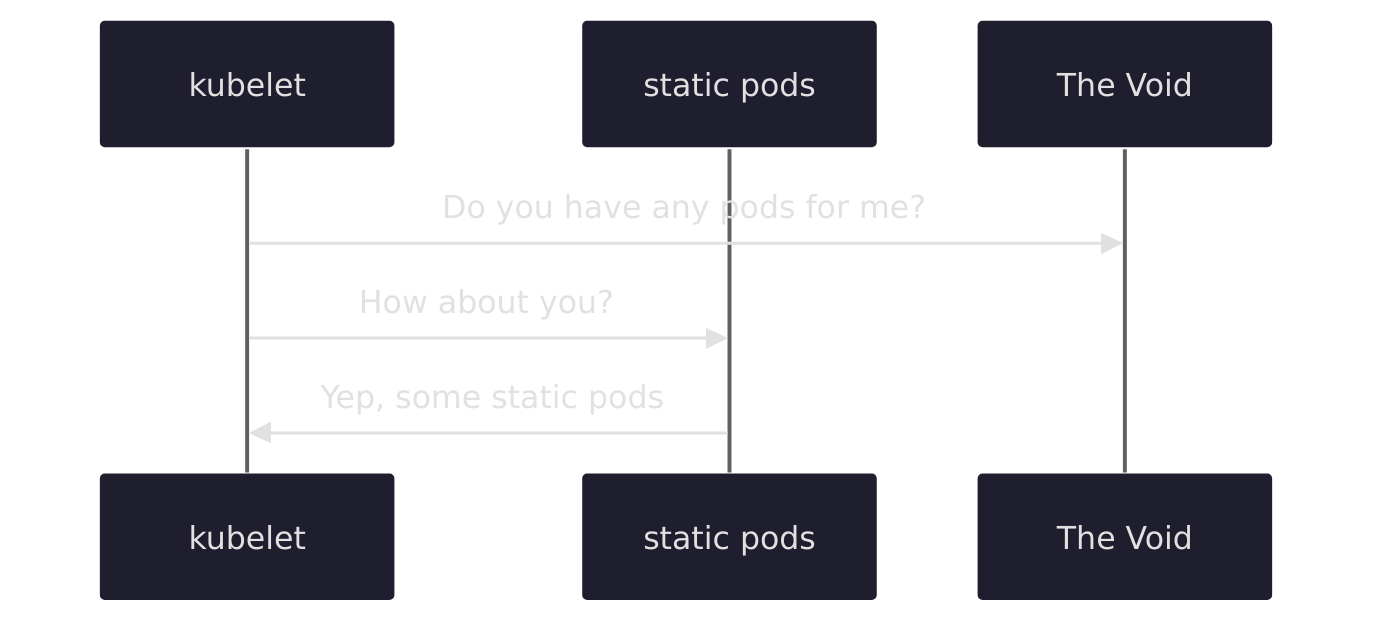

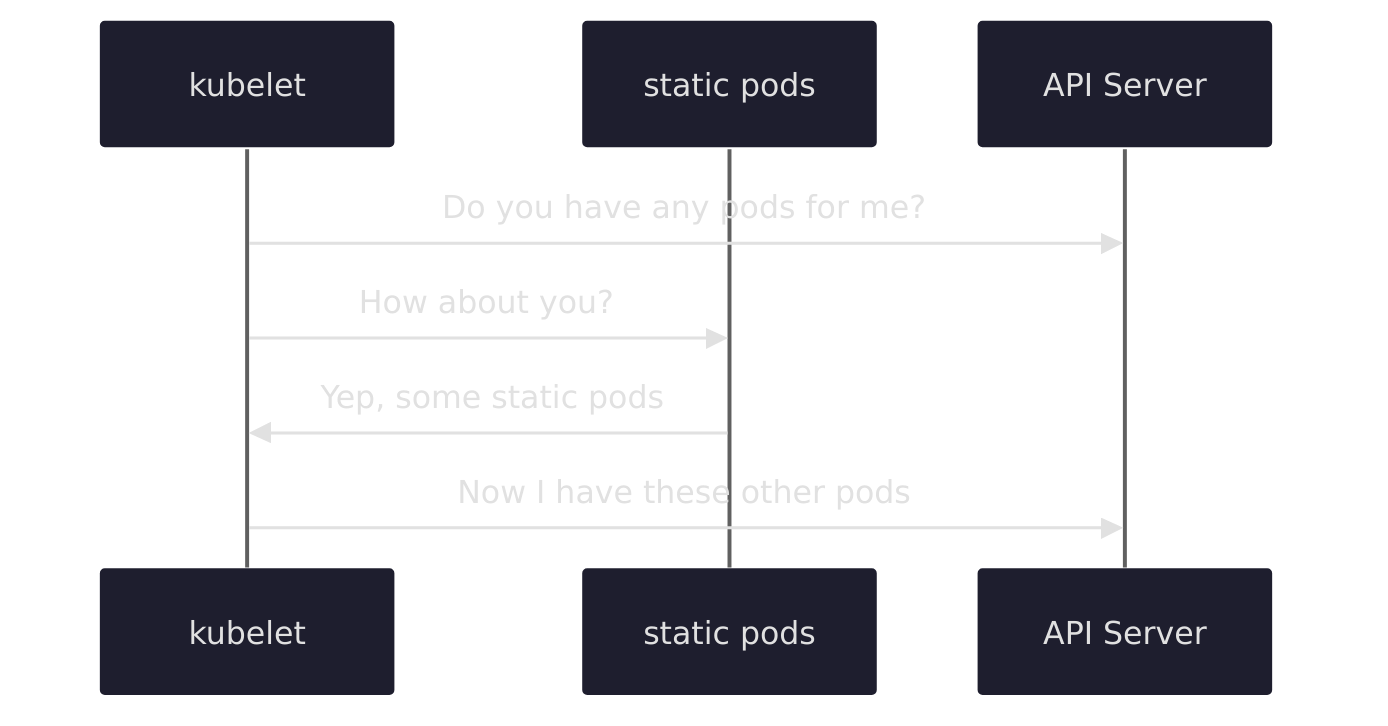

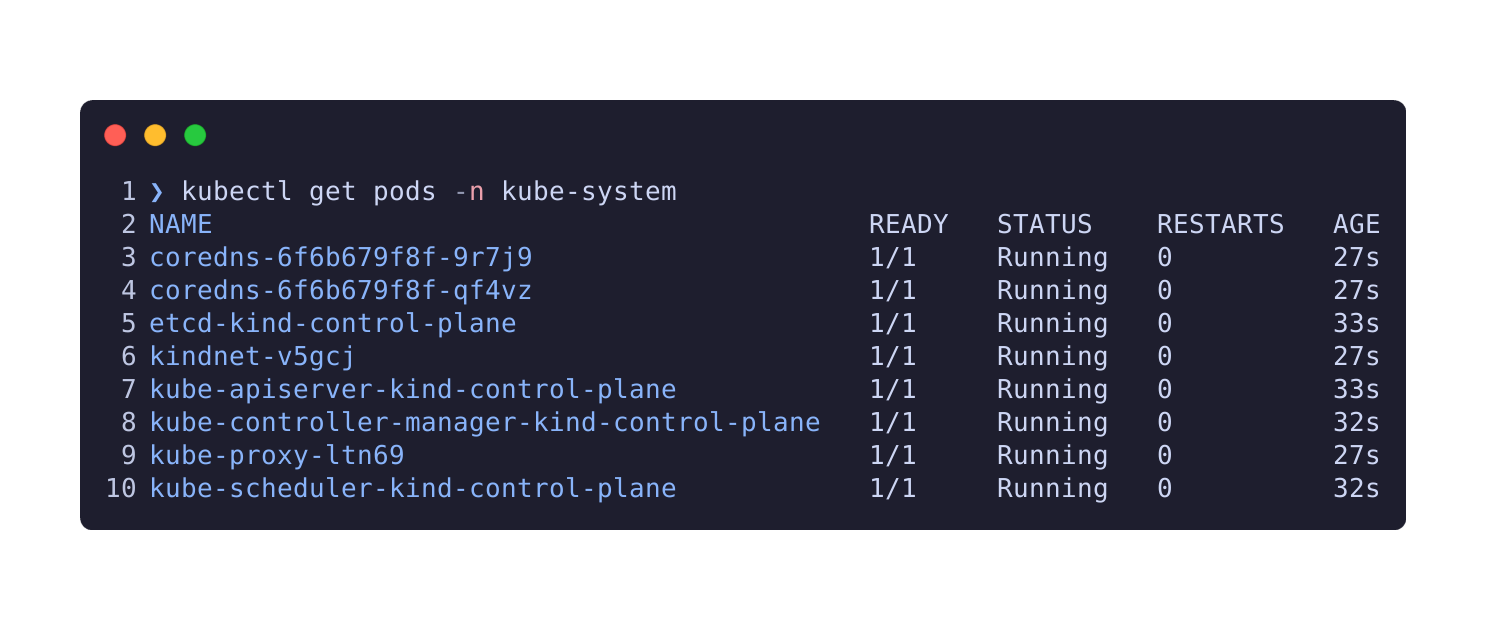

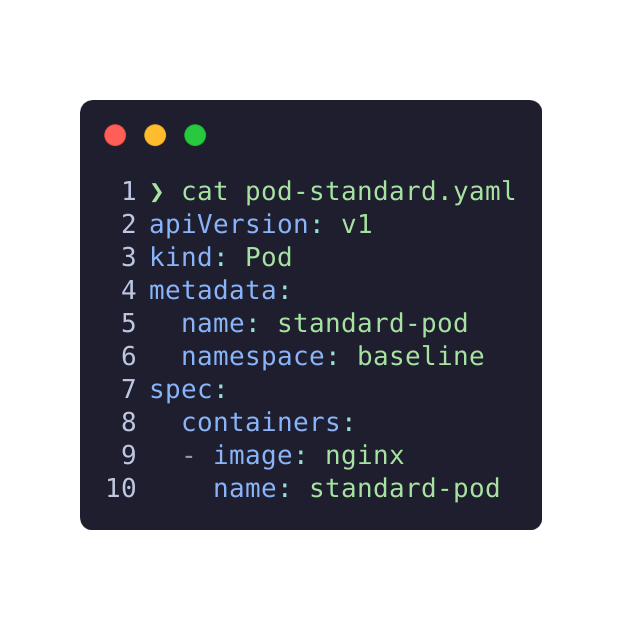

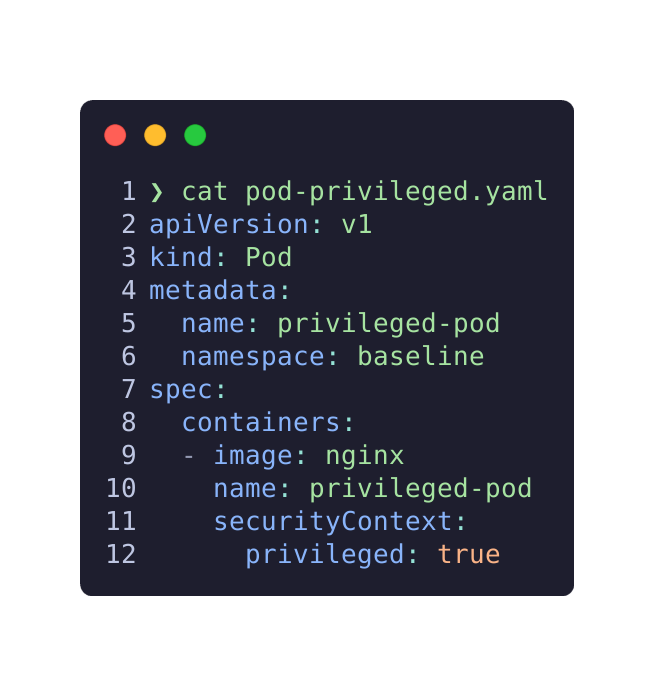

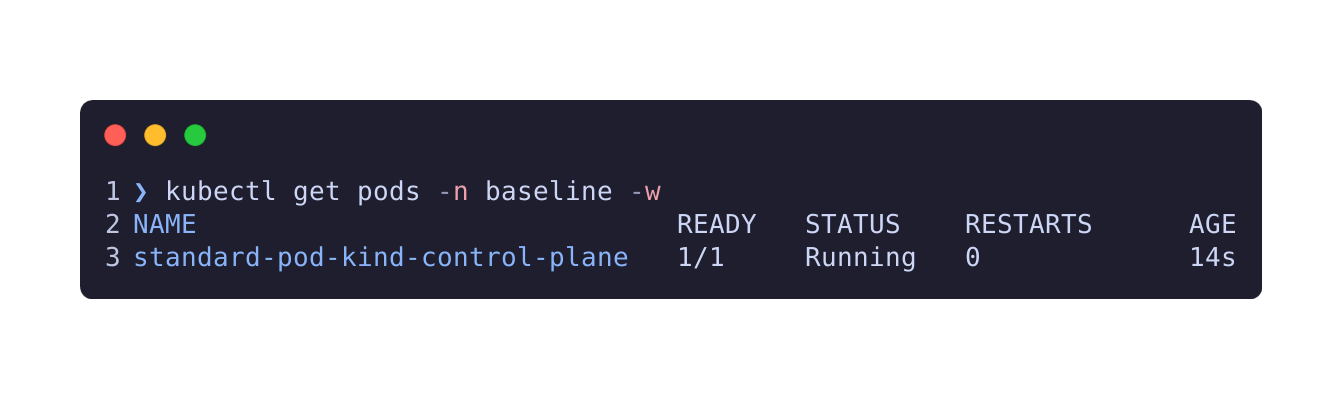

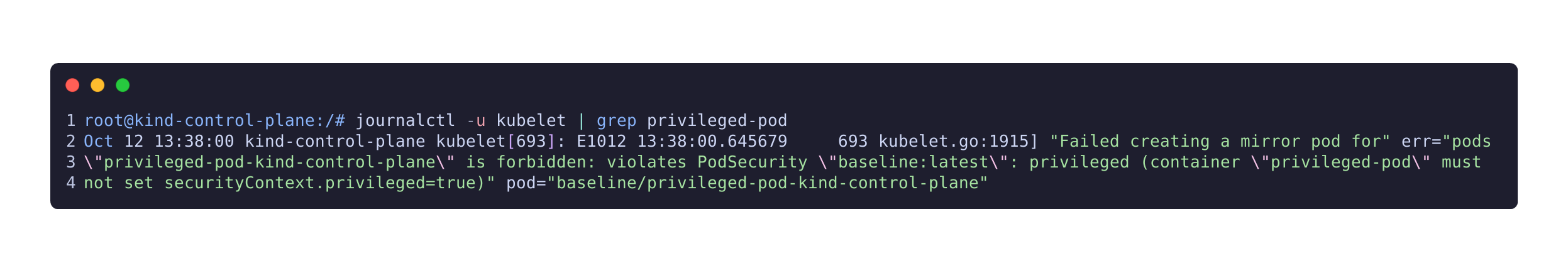

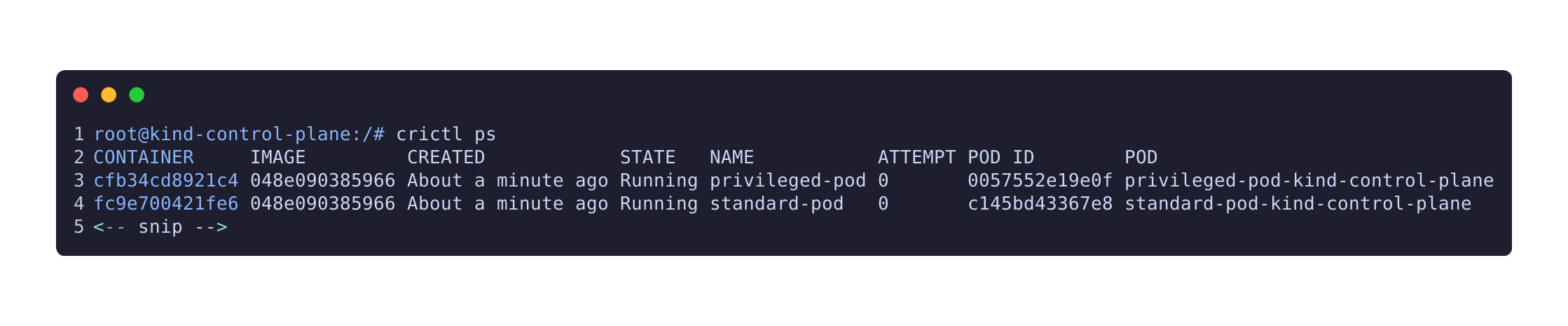

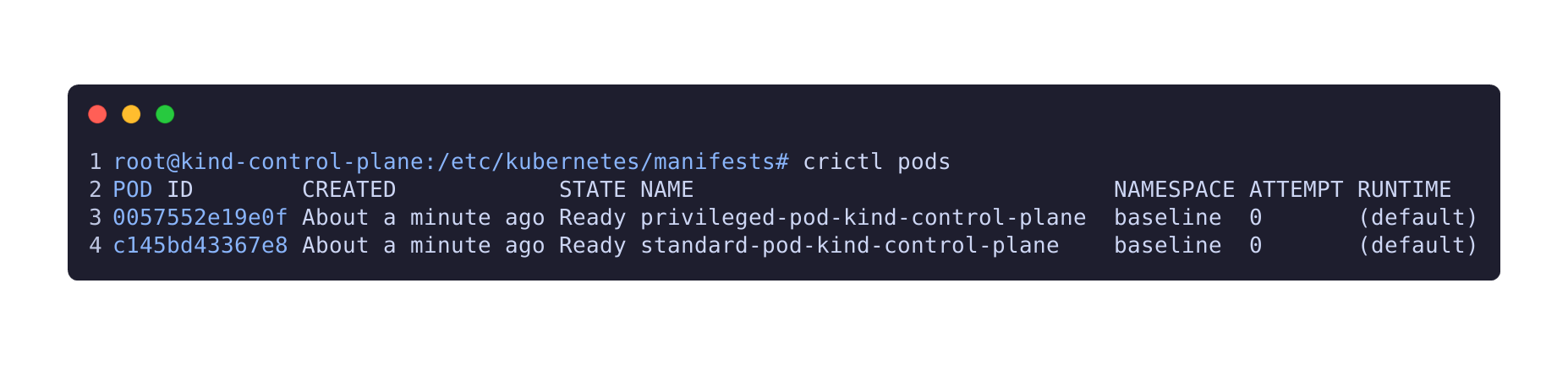

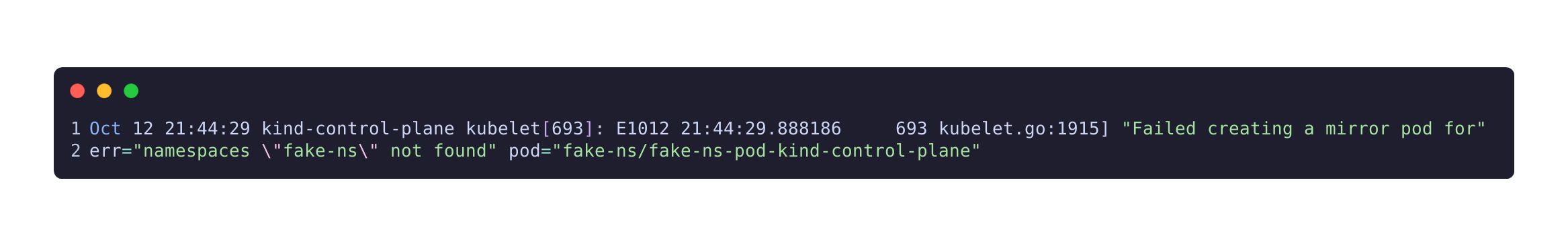

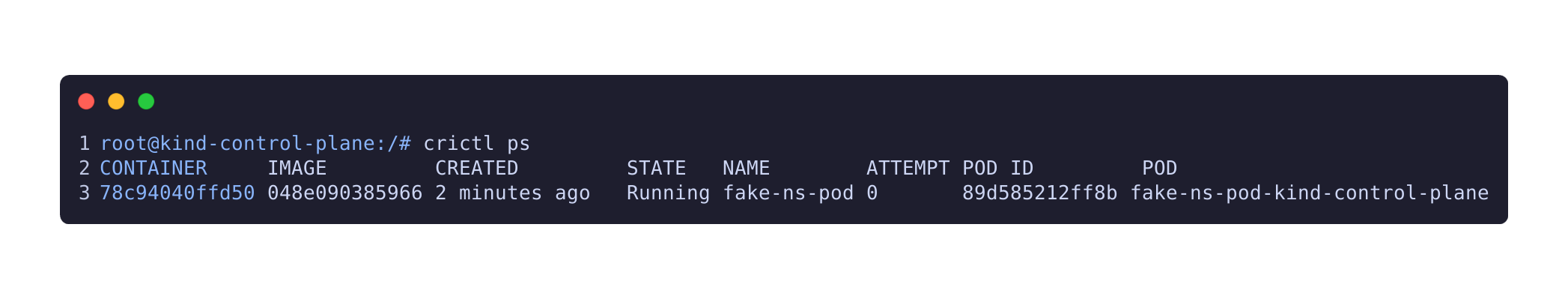

<!-- slide bg="[[img/Backgrounds-01.png]]" -->

# Static Pods Are Weird

## Iain Smart, AmberWolf

### Cloud Native and Kubernetes Edinburgh

### May 2025

---

## Whoami

- Principal Consultant at AmberWolf
- Cloud Native Offensive Security
- K8S SIG-Security Member / Third Party Audit Subproject Lead

Note: Iain does hacky things, likes pipelines, loses weekends to technical rabbitholes. AmberWolf is a red teaming and penetration testing firm. Before AW, CP and NCC. SIG-Security is a group of people.

---

## Agenda

- What are Static Pods?
- Why are these interesting?

Note: Introduction to static pods, what they are, and why we care about them. Quick poll of audience to gauge interest and existing knowledge.

---

## Bootstrapping

Kubernetes often runs the control plane as pods

Notes: Deployments like Kubeadm run control plane components, including etcd and the API Server, in pods themselves. Alleged (non-checked) fun fact: bootstraps weren't popularised until ~1870. Bootstrap Bill in PoTC existed in 1700.

---

Notes: This diagram shows a typical pod creation flow (massively simplified, obviously).

---

### But the control plane determines which pods run

Notes: Who can see the problem here? (next slide has a diagram to show the problem)

---

Notes: Without some other approach, no pods would ever be able to start, because nothing would be present to make pods run.

---

### So how do we make the control plane pods run?

Notes: Hopefully people can work out the answer at this point, given my talk title.

---

## Static Pods

Notes: The kubelet can run static pods from a dedicated location. This can be a directory or a web server.

From a security perspective, please please please keep this location secure.

The docs say you need to restart the kubelet. That's not the case anymore. https://kubernetes.io/docs/tasks/configure-pod-container/static-pod/

Fun fact: This isn't currently in the CIS benchmarks.

---

Notes: This is the default configuration for a KinD cluster.
It's the same on Kubeadm, and I've never seen this value be different in production clusters. Not every cluster will make use of static pods, but this value is generally populated.

---

Notes: Again, this is a clean KinD cluster, straight after install. Fun fact: if you tried to edit files in this directory using vim, you could break clusters due to .swp files and inodes. https://github.com/kubernetes/kubernetes/issues/44450

---

Notes: Showing that the kubelet can check in with the static location as well as the API Server for something to do.

---

Notes: Showing that the kubelet can talk to the control plane once it comes online.

---

### Pods are running. How do we see them?

---

Notes: These are mirror pods, which are a specific instance of a pod type created to track statically created pods in the API Server. Each pod is named with the value specified in the yaml file, suffixed with the name of the node the pod was created on. Note the 4 pods in the output which end with `kind-control-plane` and correspond to the manifests shown earlier.

---

### These are pods, and we can restrict pods

Notes: We need to consider two attack vectors: node compromise leading to cluster admin, and pod creation leading to node compromise. The latter is less of a concern in most deployments, as only root should have access to these directories. The exception is using web locations. General advice is not to do this. Static pods cannot use Kubernetes resources like secrets or configmaps, preventing some escalation paths.

---

## Admission Control

- Node Authorization
- Pod Security Standards
- ValidatingAdmissionPolicy
- Third Party

Notes: Node Authorization: Created pods aren't restricted to specific namespaces.

Pod Security Standards: OpenShift SCC is an acceptable(ish) alternative. Pod Security Policy is not.

Quick vote: Are static pods affected by admission control? Make this a discussion: For those of you who think this is affected, question how? Preflight request?
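
Extra note for anyone following along afterwards: a minimal Python sketch of how the mirror pods from the earlier slide could be picked out of API Server output. It assumes pod objects shaped like the items in `kubectl get pods -o json`, and that mirror pods carry the `kubernetes.io/config.mirror` annotation. The function names and the sample data are hypothetical and only for illustration.

```python
"""Sketch: recognise mirror pods in pod objects returned by the API Server."""

# Assumption: the kubelet sets this annotation on the mirror pods it creates.
MIRROR_ANNOTATION = "kubernetes.io/config.mirror"


def mirror_pod_name(static_pod_name: str, node_name: str) -> str:
    """Name shown by the API Server: the manifest name suffixed with the node name."""
    return f"{static_pod_name}-{node_name}"


def is_mirror_pod(pod: dict) -> bool:
    """Return True if the pod object carries the mirror annotation."""
    annotations = pod.get("metadata", {}).get("annotations") or {}
    return MIRROR_ANNOTATION in annotations


# Hypothetical sample data, not output from a real cluster.
sample_pods = [
    {"metadata": {"name": "etcd-kind-control-plane",
                  "annotations": {MIRROR_ANNOTATION: "example-hash"}}},
    {"metadata": {"name": "coredns-example", "annotations": {}}},
    {"metadata": {"name": "no-annotations-example"}},
]

mirror_names = [p["metadata"]["name"] for p in sample_pods if is_mirror_pod(p)]
print(mirror_names)
```

To try this against a real cluster, the `items` list from `kubectl get pods -A -o json` could be loaded with `json.load` and passed through `is_mirror_pod` in place of `sample_pods`.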

---

### Let's try it out

Notes: I'm not doing a live demo, screenshots will have to do. Manifests are available if you want to try it yourself.

---

<div id="left">  </div> <div id="right">  </div>

Notes: These are the manifests I used to deploy test pods. Note that they're both in the same namespace, which is labelled for PSS Baseline.

---

Notes: Only one pod is running, so that answers that question, right? The privileged pod wasn't created. Let's check the kubelet's logs to be sure.

---

Notes: These are the journalctl logs showing that the standard pod started successfully.

---

Notes: The pod wasn't able to register on the API Server. This certainly looks like the container wasn't created, as there was no pod startup, but something still doesn't seem right. Let's confirm there's definitely no container running on the node.

---

Notes: The pod wasn't able to register on the API Server, but the container itself is running.

---

Notes: We can confirm this again by checking the pod ID rather than the container ID.

---

Notes: Yep, definitely running. We can confirm this another way by using the kubelet API (accessed, in this case, using the wonderful [Kubeletctl](https://github.com/cyberark/kubeletctl/)).

Aside: The kubelet API isn't massively well documented. The best source for the endpoints seems to be the kubeletctl source code and documentation, or the kubelet source code.

---

## Non-existent Namespaces

Notes: We've confirmed that a pod that can't get past admission control will run as a static pod on the node, but not as a mirror pod in the Kubernetes API Server. How about other attempts to create a local container that won't be recognised by the API Server?

---

Notes: When we try to create a pod in a namespace that doesn't exist, two errors are returned. One is from `kubelet.go`, and one is from `event.go`, both detailing that the API Server rejected the request for the pod to be created.
However, as with the admission control example, we can see that the pod was successfully started.

---

Notes: As with the previous example, we can execute commands inside the running container through Kubeletctl or by talking directly to the kubelet with curl, but not through `kubectl exec`.

---

## Uses

Notes: It's probably fair to say that creating pods which can't be viewed by cluster administrators through kubectl is not a useful behaviour in day-to-day running of a cluster. I can't think of any legitimate uses of this behaviour, but I do think it would be useful to someone trying to hide a running workload on a Kubernetes cluster they've compromised. Not that I'd [talk about that](/talks/2024-03-20-kubecon-eu-ill-let-myself-in/) or anything.

---

### Questions

- iain@iainsmart.co.uk
- @smarticu5{.bsky.social}
- [https://www.linkedin.com/in/iainsmart/](https://www.linkedin.com/in/iainsmart/)

---

## References

[Blog Post this was based on](https://blog.iainsmart.co.uk/posts/2024-10-13-mirror-mirror/)

[GitHub Manifests](https://github.com/smarticu5/kubernetes_manifests/tree/main/pocs/kubelet-static-manifests)

[Walls Within Walls: What if Your Attacker Knows Parkour? - Tim Allclair & Greg Castle, Google](https://www.youtube.com/watch?v=6rMGRvcjvKc)

[Node Authorization](https://kubernetes.io/docs/reference/access-authn-authz/node/)

[Pod Security Standards](https://kubernetes.io/docs/concepts/security/pod-security-standards/)